Grappling with Bayes' Theorem

When you test positive you are probably negative?

Quite possibly. Read why.

Bayes' Theorem is a formula which can be used to calculate the chances of something being true, given some evidence. The chance of something is its probability, so Bayes is about probability and statistics.

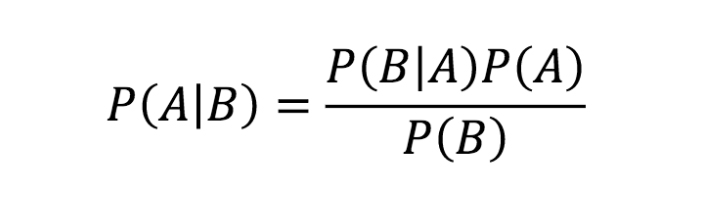

BAYES' THEOREM

P is probability; A and B are events, i.e. things that may be observed. The probability of A given B (the answer) is the probability of B given A multiplied by the probability of A on its own, all then divided by the probability of B on its own.
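As a minimal sketch, the formula can be written as a small Python function. The function name `bayes` and the example numbers are made up here purely for illustration; they don't come from any real data.

```python
def bayes(p_b_given_a: float, p_a: float, p_b: float) -> float:
    """Return P(A|B) = P(B|A) x P(A) / P(B)."""
    if p_b <= 0:
        raise ValueError("P(B) must be greater than zero")
    return p_b_given_a * p_a / p_b


# Made-up numbers, only to show the function being called.
example = bayes(p_b_given_a=0.5, p_a=0.2, p_b=0.4)
print(f"P(A|B) = {example:.2f}")  # P(A|B) = 0.25
```

The check on P(B) is there because dividing by zero makes no sense: if B never happens, "A given B" has no meaning.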

(1) Proving Bayes' Theorem

To understand Bayes' Theorem, it seems useful to begin by proving it. This page does that, I think. (2) and (3) then show examples of 'Bayes in action'.

W x X = Y x Z

Even I know that if W x X = Y x Z then W = (Y x Z) divided by X. It's algebra.

3 = (2 x 6) / 4

or:

P(A|B) = P(B|A) x P(A) / P(B)

This is exactly the same formula as W = (Y x Z) divided by X above but instead of W (3 say) you use P(A|B) (3% say). It looks more complicated but it isn't. It's still just one number, but a percentage – a percentage probability.

But this doesn't prove Bayes' Theorem.

To continue the proof » A is smoke, B is fire.

The following percentage 'prior' probabilities were plucked from the air – one per variable in the formula. Nothing is actually being calculated. It is necessary to have all four 'priors' in order to prove the theorem but normally you would have only three 'priors' and the one you don't know is what you're calculating.

- W is the probability of smoke if there's fire (50% say): P(A|B). There often is – fires tend to produce smoke.

- X is the probability of fire regardless of smoke (5% say): P(B). This is the straight chance of a fire.

- Y is the probability of fire if there's smoke (25% say): P(B|A). There may not be fire because 'smoke' has other causes than fire.

- Z is the probability of smoke regardless of fire (10% say): P(A). This is the straight chance of smoke.

(1) The probability of smoke AND fire together is Z times Y, i.e. the 10% probability of smoke multiplied by the 25% probability of fire if there's smoke.

(2) The probability of smoke AND fire together is also X times W, i.e. the small 5% probability of fire multiplied by the high 50% probability of smoke if there's fire.

Assume you sometimes look out of the window.

The chance of smoke and fire together is a 1 in 10 chance of smoke multiplied by a 1 in 4 chance of fire if there happens to be smoke, or 10% x 25% = 2.5%, or once every 40 times you look out of the window.

So if you look out of the window on ten different occasions you will probably see smoke once. When you do, three times out of four it doesn't mean there's a fire because there probably isn't. It's just smoke by itself.

It might mean a fire however. The chance of fire and smoke together is the same as the chance of smoke and fire together: a 1 in 20 chance of a fire multiplied by a 1 in 2 chance of smoke with a fire, or 5% x 50% = 2.5%, or once every 40 times you look out of the window.

Both of those describe smoke and fire together and the result is the same. You can therefore equate Z times Y with X times W, which is the same as P(A) x P(B|A) = P(B) x P(A|B), or P(A|B) = P(B|A) x P(A) all divided by P(B): the theorem.
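The 'two routes to the same answer' idea can be checked with a few lines of Python. This is only a sketch using the plucked-from-the-air percentages above; the variable names are my own.

```python
import math

p_smoke = 0.10             # Z: P(A), smoke regardless of fire
p_fire = 0.05              # X: P(B), fire regardless of smoke
p_fire_given_smoke = 0.25  # Y: P(B|A)
p_smoke_given_fire = 0.50  # W: P(A|B)

# Route 1: start from smoke, then ask about fire (Z times Y).
joint_via_smoke = p_smoke * p_fire_given_smoke

# Route 2: start from fire, then ask about smoke (X times W).
joint_via_fire = p_fire * p_smoke_given_fire

print(f"{joint_via_smoke:.3f} {joint_via_fire:.3f}")  # 0.025 0.025
print(math.isclose(joint_via_smoke, joint_via_fire))  # True
```

`math.isclose` is used rather than `==` because decimal fractions are not always stored exactly in floating point.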

I think the proof of Bayes' Theorem lies in the fact that, as in the 'smoke and fire together' example, you can calculate the probability of the same thing two different ways. You can then put them on opposite sides of an equation and move the multiplying value P(B) on one side across to become a dividing value on the other. You then have Bayes' Theorem.

P(A|B) x P(B) = P(B|A) x P(A)

becomes

P(A|B) = P(B|A) x P(A) / P(B)
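In normal use you know three of the four numbers and calculate the fourth. Here is a short Python sketch that pretends W, the probability of smoke given fire, is the unknown and recovers it from the other three smoke-and-fire figures. The variable names are my own.

```python
p_smoke = 0.10             # P(A)
p_fire = 0.05              # P(B)
p_fire_given_smoke = 0.25  # P(B|A)

# P(A|B) = P(B|A) x P(A) / P(B)
p_smoke_given_fire = p_fire_given_smoke * p_smoke / p_fire

print(f"{p_smoke_given_fire:.0%}")  # 50%
```

The result matches the 50% that was assumed for W, which is what you would expect if the four figures are consistent with each other.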

It would just be nice to be able to explain it in plain English, but I can't, and I haven't seen it done anywhere else either.

When would you use Bayes' Theorem (perhaps not the formula, but at least the reasoning behind it)? You are not going to get your calculator out every time you want to know the chance of something happening or whether something is true.

It's for when you observe something or are told something (an 'event') and you need to know how true it might be. The danger with so-called 'facts' is that humans tend to make assumptions based on what they already believe. What you believe before the event is known as a 'prior' in Bayesian reasoning. So check your beliefs, because you need the 'prior' to be true, not something you happen to believe for all kinds of good or stupid reasons. Or perhaps you don't already believe anything and when an 'event' occurs, you have no 'prior' to go on except the event. "Gosh, is this true?"

(2) Checking if something is true

How far new evidence shows that 'something' is true depends on its (prior) probability BEFORE the new evidence was found, i.e. the likelihood of the 'something' being true in the first place (eg the 'background' level). If a test is 90% accurate, it DOES NOT MEAN a positive result makes it 90% likely that the 'something' is true.

Suppose 1% of the population has a condition and the other 99% doesn't. A diagnostic screening test exists which is 90% accurate for people who have the condition (the 'true positive' rate) and wrongly gives a positive for 9% of people who don't (the 'false positive' rate). If someone tests positive, what are the chances they have the condition? This is P(A|B) in the formula: the answer to the question. Is it 90%?

In reality there's about a 91% chance that they haven't, and that's because of the 'prior' base rate of only 1% of the population having the condition in the first place. The 'prior' base rate is P(A) in the formula: 0.01 (1%).

The formula again:

P(A|B) = P(B|A) x P(A) / P(B)

P(B|A) in the formula is 90% – 0.9 – the accuracy of the test when someone has the condition.

Then there's the probability of a positive test across the whole population regardless of whether the result is right or wrong. This is P(B) in the formula and is the total of (1) the 'true positives' in the 1% of the population who have the condition plus (2) the 'false positives' in the 99% of the population who don't. The figure is 90% of 1% (0.009) + 9% of 99% (0.0891) which adds up to 0.0981 – near enough to 0.1.
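The P(B) sum can be sketched in Python as below. The function and parameter names are my own invention; the three rates are the ones quoted in the text.

```python
def probability_of_positive(base_rate: float,
                            true_positive_rate: float,
                            false_positive_rate: float) -> float:
    """Chance of a positive test for a random person, right or wrong."""
    true_positives = true_positive_rate * base_rate            # 90% of 1%
    false_positives = false_positive_rate * (1.0 - base_rate)  # 9% of 99%
    return true_positives + false_positives


p_positive = probability_of_positive(0.01, 0.90, 0.09)
print(f"{p_positive:.4f}")  # 0.0981
```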

(0.9 x 0.01) / 0.1

- Chance is the desired answer, i.e. the 'odds' of a positive diagnosis being correct for anyone in the population.

- 90% is the chance of a correct diagnosis for someone with the condition.

- 1% is the base rate, i.e. the percentage of people in the population who have the condition.

- 10% is the calculated chance of a positive test across the whole population regardless of whether the result is correct or not.

Chance = 90% x 1% / 10%

The correct answer is not 90% but only about 9%. This is P(A|B) in the formula (the actual probability of a positive test being right). The inputs are (i) the 'prior' 1% base rate of the condition in the whole population and (ii) the accuracy of the test: its 90% 'true positive' rate and its 9% 'false positive' rate.
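For anyone who wants to check the arithmetic, here is a rough Python version. It uses the unrounded P(B) of 0.0981 rather than 0.1, so the answer comes out a shade above 9%. The variable names are my own.

```python
base_rate = 0.01            # P(A): people who have the condition
true_positive_rate = 0.90   # P(B|A): positive test if you have it
false_positive_rate = 0.09  # positive test if you don't have it

# P(B): all positive tests, right or wrong.
p_positive = (true_positive_rate * base_rate
              + false_positive_rate * (1.0 - base_rate))

# P(A|B): chance of having the condition given a positive test.
p_condition_given_positive = true_positive_rate * base_rate / p_positive

print(f"{p_condition_given_positive:.1%}")      # 9.2%
print(f"{1 - p_condition_given_positive:.1%}")  # 90.8%
```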

Summary:

- 1% of the population is known to have a particular condition.

- A test for the condition is 90% accurate in detecting it.

- A screening exercise of 1,000 people is done using the test.

- The test correctly detects the condition in 9 of those people (90% of the 10 who have it).

- The test wrongly detects the condition in about 89 of those people (9% of the 990 who don't).

- The chance of a positive test being correct is 9%, not 90%.
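The same screening exercise can be counted out in people rather than percentages. This Python sketch works out the expected head counts for 1,000 people using the rates given earlier (1% base rate, 90% true positive rate, 9% false positive rate). The variable names are my own, and real screening results would vary around these averages.

```python
population = 1000
base_rate = 0.01
true_positive_rate = 0.90
false_positive_rate = 0.09

with_condition = population * base_rate         # about 10 people
without_condition = population - with_condition # about 990 people

true_positives = with_condition * true_positive_rate       # about 9
false_positives = without_condition * false_positive_rate  # about 89

share_correct = true_positives / (true_positives + false_positives)

print(f"{true_positives:.1f} {false_positives:.1f} {share_correct:.1%}")
# 9.0 89.1 9.2%
```

Out of roughly 98 positive results, only about 9 belong to people who actually have the condition.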

(3) A 'likely culprit' scenario

- One night, in a town, a hit-and-run accident occurs involving a delivery van.

- The town has two delivery firms, one with red vans, the other with orange vans.

- 85% of the vans are red and 15% are orange, so the colour shows which firm a van belongs to.

- A woman witnessed the accident, identifying the van involved as orange in colour.

- In tests, the woman correctly identified a delivery van's colour 80% of the time.

The question is which delivery firm's driver caused the accident given that the woman's accuracy rate in correctly identifying the colour is 80%.

At first glance it perhaps seems reasonable to assume that if she is right 80% of the time and she said the van was orange, then it probably was orange – an 80% chance. In fact it is more likely to be red.

Assuming the 85% / 15% ratio, the chance of it being orange is only 41%, not 80% as one might assume to begin with. A key thing to grasp with Bayes is the effect of false positives (errors) and the base rate fallacy.

The woman correctly identifies a delivery van as orange some of the time but she also incorrectly identifies a red one as orange (a false positive) even more often. That's because there are far more red ones, which raises the chance that an 'orange' identification is wrong from 20% to about 59% and, by the same token, reduces her success rate from 80% to 41%. That's a success rate of only 41% when she is 80% accurate, so she's probably wrong. Success rate is not the same thing as accuracy.

Calculation:

- The chance of a van being orange and the woman correctly identifying it as orange is 15% (orange vans) x 80% (accuracy) = 12% 'true positives'.

- The chance of a van being red and the woman incorrectly identifying it as orange is 85% (red vans) x 20% (error rate) = 17% 'false positives'.

- The chance of the woman identifying any van as orange is the total of the orange identifications, right or wrong, i.e. 12% + 17% = 29%.

- Using Bayes' formula, the chance of the woman being right about the accident van being orange is 12% divided by 29% = 41% (about).

- Good luck with all that.

Chance = (15% x 80%) / 29%
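The steps of the calculation can be followed in a short Python sketch. The variable names are my own, and it assumes the woman's 80% accuracy applies equally to red vans and orange vans.

```python
share_orange = 0.15  # orange vans in the town
share_red = 0.85     # red vans in the town
accuracy = 0.80      # assumed to be the same for both colours

true_orange = share_orange * accuracy       # orange, called orange: 12%
false_orange = share_red * (1 - accuracy)   # red, called orange: 17%
says_orange = true_orange + false_orange    # any van called orange: 29%

p_orange_given_says_orange = true_orange / says_orange

print(f"{p_orange_given_says_orange:.1%}")  # 41.4%
```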

What if she said it was red? There are far more red delivery vans than orange ones so she's more likely to be right before she even says anything. According to my calculations the chance of her being right in this instance increases from 41% to almost 96%. The chance it was red started off at 85% anyway (because of the much bigger number of red vans) so what she said doesn't make much difference. It hardly seems worth asking the question.
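A small Python function makes it easy to compare the 'red' and 'orange' answers side by side. This is a sketch under two assumptions of my own: there are only two van colours, and the witness is equally accurate with both. The function name is hypothetical.

```python
def chance_witness_is_right(share_of_colour: float, accuracy: float) -> float:
    """Chance the van really is the colour the witness named."""
    correct = share_of_colour * accuracy               # that colour, named correctly
    mistaken = (1 - share_of_colour) * (1 - accuracy)  # other colour, misnamed
    return correct / (correct + mistaken)


print(f"{chance_witness_is_right(0.85, 0.80):.1%}")  # says red: 95.8%
print(f"{chance_witness_is_right(0.15, 0.80):.1%}")  # says orange: 41.4%
```

The only thing that changes between the two calls is the share of vans of the named colour, which shows how much the base rate drives the answer.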

Something seemingly unusual happens. The first question is how unusual is it actually? The second question is not what is the most likely explanation for it but how likely is any particular explanation compared to any other.

It seems to me that the Bayes' Theorem formula is for statisticians. It would be hard for a normal person to work out what 'priors' to feed in, let alone obtain accurate information about rates, probabilities etc, and then use the formula. The value of Bayes to a normal person is perhaps just as a gentle reminder that things may not be as they seem initially once the situation that existed 'before the event' is fed into the thinking. The main thing is to think!

Thomas Bayes was not formally trained as a mathematician but was a skilled amateur having written a book in his thirties about calculus. He studied logic and theology at the University of Edinburgh and was later ordained to become Minister at the Presbyterian Chapel in Tunbridge Wells in 1733. He died in 1761. His work on probability was publicised only after his death by Richard Price, a fellow clergyman who was also a pioneer insurance statistician.

I am by no means a statistician or a mathematician but I did Pure Maths with Statistics at A-level – and failed!

I know there are hundreds of web pages and books about Bayes' Theorem.

I wrote this page only because I know it's important but I couldn't quite see how it works (though I saw the general principle). When you try to explain something you don't quite understand to someone else who doesn't (or isn't that interested) you realise you have to understand it first. I do now.
